开发环境

| kubernetes版本 | 1.22.7 |

|---|---|

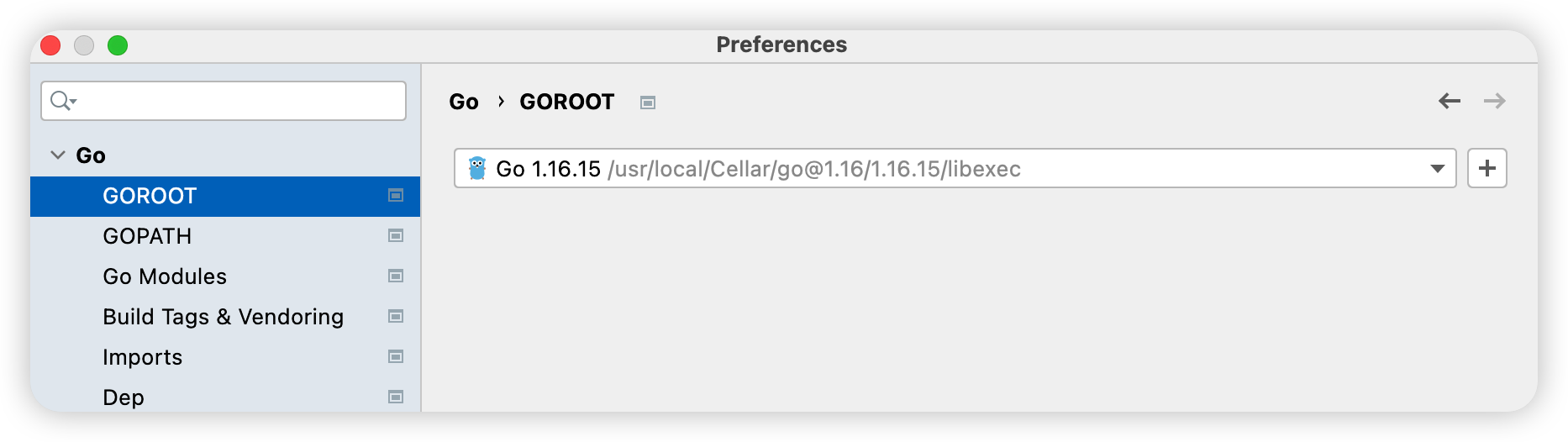

| go | 1.16 |

| ide | goland |

| 集群节点 | 1 master,2 node |

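Development

Create a project named test-scheduler in GoLand.

go.mod

Download the go.mod of Kubernetes 1.22.7 into the test-scheduler directory:

wget https://raw.githubusercontent.com/kubernetes/kubernetes/v1.22.7/go.mod

Change the module name to test-scheduler.

Keep the following entries in require: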

require (

k8s.io/api v0.0.0

k8s.io/apimachinery v0.0.0

k8s.io/component-base v0.0.0

k8s.io/klog/v2 v2.9.0

k8s.io/kubernetes v0.0.0

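)

Keep the k8s.io-related dependencies in replace, point them at the local kubernetes-1.22.7 directory, and append the following entry at the end:

k8s.io/kubernetes => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7

The final go.mod looks like this: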

module test-scheduler

go 1.16

require (

k8s.io/api v0.0.0

k8s.io/apimachinery v0.0.0

k8s.io/component-base v0.0.0

k8s.io/klog/v2 v2.9.0

k8s.io/kubernetes v0.0.0

)

replace (

k8s.io/api => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/api

k8s.io/apiextensions-apiserver => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/apiextensions-apiserver

k8s.io/apimachinery => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/apimachinery

k8s.io/apiserver => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/apiserver

k8s.io/cli-runtime => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/cli-runtime

k8s.io/client-go => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/client-go

k8s.io/cloud-provider => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/cloud-provider

k8s.io/cluster-bootstrap => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/cluster-bootstrap

k8s.io/code-generator => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/code-generator

k8s.io/component-base => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/component-base

k8s.io/component-helpers => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/component-helpers

k8s.io/controller-manager => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/controller-manager

k8s.io/cri-api => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/cri-api

k8s.io/csi-translation-lib => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/csi-translation-lib

k8s.io/kube-aggregator => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/kube-aggregator

k8s.io/kube-controller-manager => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/kube-controller-manager

k8s.io/kube-proxy => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/kube-proxy

k8s.io/kube-scheduler => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/kube-scheduler

k8s.io/kubectl => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/kubectl

k8s.io/kubelet => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/kubelet

k8s.io/legacy-cloud-providers => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/legacy-cloud-providers

k8s.io/metrics => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/metrics

k8s.io/mount-utils => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/mount-utils

k8s.io/pod-security-admission => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/pod-security-admission

k8s.io/sample-apiserver => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/sample-apiserver

k8s.io/sample-cli-plugin => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/sample-cli-plugin

k8s.io/sample-controller => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7/staging/src/k8s.io/sample-controller

k8s.io/kubernetes => /Users/chenjie/work/go/src/k8s1/kubernetes-1.22.7

)

Go version

To make sure the build succeeds, set the Go version of the test-scheduler project to the same version that the official go.mod declares.

plugins.go

To write the scheduler plugin, create a pkg/plugins directory in the project root and add a plugins.go file inside it:

package plugins

import (
	"context"
	v1 "k8s.io/api/core/v1"
	"k8s.io/apimachinery/pkg/runtime"
	"k8s.io/klog/v2"
	"k8s.io/kubernetes/pkg/scheduler/framework"
)

// Name is the plugin name; scheduler-config.yaml enables the plugin under this name.
const Name = "test-plugin"

type Test struct {
	handle framework.Handle
}

func (t *Test) Name() string {
	return Name
}

func (t *Test) Filter(ctx context.Context, state *framework.CycleState, pod *v1.Pod, nodeInfo *framework.NodeInfo) *framework.Status {
	klog.V(3).Infof("filter pod: %v, node: %v", pod.Name, nodeInfo.Node().Name)
	// Accept every node; this plugin only logs that it was called.
	return framework.NewStatus(framework.Success, "")
}

// New is the plugin factory that main.go registers with the scheduler.
func New(_ runtime.Object, f framework.Handle) (framework.Plugin, error) {
	return &Test{
		handle: f,
	}, nil
}

main.go

Create main.go in the project root:

package main

import (
	"math/rand"
	"os"
	"time"

	"k8s.io/component-base/logs"
	"k8s.io/kubernetes/cmd/kube-scheduler/app"

	"test-scheduler/pkg/plugins"
)

func main() {
	rand.Seed(time.Now().UTC().UnixNano())

	command := app.NewSchedulerCommand(
		// Register the custom plugin under its name.
		app.WithPlugin(plugins.Name, plugins.New),
	)

	logs.InitLogs()
	defer logs.FlushLogs()

	if err := command.Execute(); err != nil {
		os.Exit(1)
	}
}

scheduler-config.yaml

Create the configuration file scheduler-config.yaml in the project root:

apiVersion: kubescheduler.config.k8s.io/v1beta2
kind: KubeSchedulerConfiguration
clientConnection:
  kubeconfig: /Users/chenjie/.kube/config
leaderElection:
  leaderElect: false
profiles:
  - schedulerName: test-scheduler # name of the scheduler
    plugins:
      filter:
        enabled:
          - name: "test-plugin" # enable the plugin

Start the scheduler

go run main.go \
  --authentication-kubeconfig=/Users/chenjie/.kube/config \
  --authorization-kubeconfig=/Users/chenjie/.kube/config \
  --kubeconfig=/Users/chenjie/.kube/config \
  --config=/Users/chenjie/work/go/src/k8s1/test-scheduler/scheduler-config.yaml \
  --v=5

If the run fails because dependency modules are missing, run go mod tidy.

Startup output

I0308 15:16:37.042413 27469 flags.go:59] FLAG: --add_dir_header="false"

I0308 15:16:37.042547 27469 flags.go:59] FLAG: --address="0.0.0.0"

I0308 15:16:37.042555 27469 flags.go:59] FLAG: --allow-metric-labels="[]"

I0308 15:16:37.042566 27469 flags.go:59] FLAG: --alsologtostderr="false"

I0308 15:16:37.042571 27469 flags.go:59] FLAG: --authentication-kubeconfig="/Users/chenjie/.kube/config"

I0308 15:16:37.042578 27469 flags.go:59] FLAG: --authentication-skip-lookup="false"

I0308 15:16:37.042585 27469 flags.go:59] FLAG: --authentication-token-webhook-cache-ttl="10s"

I0308 15:16:37.042592 27469 flags.go:59] FLAG: --authentication-tolerate-lookup-failure="true"

I0308 15:16:37.042597 27469 flags.go:59] FLAG: --authorization-always-allow-paths="[/healthz,/readyz,/livez]"

I0308 15:16:37.042606 27469 flags.go:59] FLAG: --authorization-kubeconfig="/Users/chenjie/.kube/config"

I0308 15:16:37.042612 27469 flags.go:59] FLAG: --authorization-webhook-cache-authorized-ttl="10s"

I0308 15:16:37.042617 27469 flags.go:59] FLAG: --authorization-webhook-cache-unauthorized-ttl="10s"

I0308 15:16:37.042622 27469 flags.go:59] FLAG: --bind-address="0.0.0.0"

I0308 15:16:37.042629 27469 flags.go:59] FLAG: --cert-dir=""

I0308 15:16:37.042634 27469 flags.go:59] FLAG: --client-ca-file=""

I0308 15:16:37.042639 27469 flags.go:59] FLAG: --config="/Users/chenjie/work/go/src/k8s1/test-scheduler/scheduler-config.yaml"

I0308 15:16:37.042745 27469 flags.go:59] FLAG: --contention-profiling="true"

I0308 15:16:37.042750 27469 flags.go:59] FLAG: --disabled-metrics="[]"

I0308 15:16:37.042758 27469 flags.go:59] FLAG: --experimental-logging-sanitization="false"

I0308 15:16:37.042764 27469 flags.go:59] FLAG: --feature-gates=""

I0308 15:16:37.042772 27469 flags.go:59] FLAG: --help="false"

I0308 15:16:37.042777 27469 flags.go:59] FLAG: --http2-max-streams-per-connection="0"

I0308 15:16:37.042784 27469 flags.go:59] FLAG: --kube-api-burst="100"

I0308 15:16:37.042790 27469 flags.go:59] FLAG: --kube-api-content-type="application/vnd.kubernetes.protobuf"

I0308 15:16:37.042796 27469 flags.go:59] FLAG: --kube-api-qps="50"

I0308 15:16:37.042803 27469 flags.go:59] FLAG: --kubeconfig="/Users/chenjie/.kube/config"

I0308 15:16:37.042808 27469 flags.go:59] FLAG: --leader-elect="true"

I0308 15:16:37.042813 27469 flags.go:59] FLAG: --leader-elect-lease-duration="15s"

I0308 15:16:37.042840 27469 flags.go:59] FLAG: --leader-elect-renew-deadline="10s"

I0308 15:16:37.042848 27469 flags.go:59] FLAG: --leader-elect-resource-lock="leases"

I0308 15:16:37.042852 27469 flags.go:59] FLAG: --leader-elect-resource-name="kube-scheduler"

I0308 15:16:37.042857 27469 flags.go:59] FLAG: --leader-elect-resource-namespace="kube-system"

I0308 15:16:37.042862 27469 flags.go:59] FLAG: --leader-elect-retry-period="2s"

I0308 15:16:37.042867 27469 flags.go:59] FLAG: --lock-object-name="kube-scheduler"

I0308 15:16:37.042873 27469 flags.go:59] FLAG: --lock-object-namespace="kube-system"

I0308 15:16:37.042877 27469 flags.go:59] FLAG: --log-flush-frequency="5s"

I0308 15:16:37.042883 27469 flags.go:59] FLAG: --log_backtrace_at=":0"

I0308 15:16:37.042893 27469 flags.go:59] FLAG: --log_dir=""

I0308 15:16:37.042898 27469 flags.go:59] FLAG: --log_file=""

I0308 15:16:37.042903 27469 flags.go:59] FLAG: --log_file_max_size="1800"

I0308 15:16:37.043289 27469 flags.go:59] FLAG: --logging-format="text"

I0308 15:16:37.043301 27469 flags.go:59] FLAG: --logtostderr="true"

I0308 15:16:37.043306 27469 flags.go:59] FLAG: --master=""

I0308 15:16:37.043312 27469 flags.go:59] FLAG: --one_output="false"

I0308 15:16:37.043317 27469 flags.go:59] FLAG: --permit-address-sharing="false"

I0308 15:16:37.043323 27469 flags.go:59] FLAG: --permit-port-sharing="false"

I0308 15:16:37.043328 27469 flags.go:59] FLAG: --policy-config-file=""

I0308 15:16:37.043332 27469 flags.go:59] FLAG: --policy-configmap=""

I0308 15:16:37.043338 27469 flags.go:59] FLAG: --policy-configmap-namespace="kube-system"

I0308 15:16:37.043342 27469 flags.go:59] FLAG: --port="10251"

I0308 15:16:37.043381 27469 flags.go:59] FLAG: --profiling="true"

I0308 15:16:37.043397 27469 flags.go:59] FLAG: --requestheader-allowed-names="[]"

I0308 15:16:37.043409 27469 flags.go:59] FLAG: --requestheader-client-ca-file=""

I0308 15:16:37.043417 27469 flags.go:59] FLAG: --requestheader-extra-headers-prefix="[x-remote-extra-]"

I0308 15:16:37.043767 27469 flags.go:59] FLAG: --requestheader-group-headers="[x-remote-group]"

I0308 15:16:37.043782 27469 flags.go:59] FLAG: --requestheader-username-headers="[x-remote-user]"

I0308 15:16:37.043795 27469 flags.go:59] FLAG: --secure-port="10259"

I0308 15:16:37.043805 27469 flags.go:59] FLAG: --show-hidden-metrics-for-version=""

I0308 15:16:37.043811 27469 flags.go:59] FLAG: --skip_headers="false"

I0308 15:16:37.043817 27469 flags.go:59] FLAG: --skip_log_headers="false"

I0308 15:16:37.043821 27469 flags.go:59] FLAG: --stderrthreshold="2"

I0308 15:16:37.043827 27469 flags.go:59] FLAG: --tls-cert-file=""

I0308 15:16:37.043832 27469 flags.go:59] FLAG: --tls-cipher-suites="[]"

I0308 15:16:37.043838 27469 flags.go:59] FLAG: --tls-min-version=""

I0308 15:16:37.043842 27469 flags.go:59] FLAG: --tls-private-key-file=""

I0308 15:16:37.043848 27469 flags.go:59] FLAG: --tls-sni-cert-key="[]"

I0308 15:16:37.044082 27469 flags.go:59] FLAG: --use-legacy-policy-config="false"

I0308 15:16:37.044098 27469 flags.go:59] FLAG: --v="5"

I0308 15:16:37.044107 27469 flags.go:59] FLAG: --version="false"

I0308 15:16:37.044120 27469 flags.go:59] FLAG: --vmodule=""

I0308 15:16:37.044125 27469 flags.go:59] FLAG: --write-config-to=""

I0308 15:16:37.483514 27469 serving.go:347] Generated self-signed cert in-memory

I0308 15:16:38.236933 27469 requestheader_controller.go:244] Loaded a new request header values for RequestHeaderAuthRequestController

I0308 15:16:38.342483 27469 configfile.go:96] "Using component config" config="apiVersion: kubescheduler.config.k8s.io/v1beta2nclientConnection:n acceptContentTypes: ""n burst: 100n contentType: application/vnd.kubernetes.protobufn kubeconfig: /Users/chenjie/.kube/confign qps: 50nenableContentionProfiling: truenenableProfiling: truenhealthzBindAddress: 0.0.0.0:10251nkind: KubeSchedulerConfigurationnleaderElection:n leaderElect: falsen leaseDuration: 15sn renewDeadline: 10sn resourceLock: leasesn resourceName: kube-schedulern resourceNamespace: kube-systemn retryPeriod: 2snmetricsBindAddress: 0.0.0.0:10251nparallelism: 16npercentageOfNodesToScore: 0npodInitialBackoffSeconds: 1npodMaxBackoffSeconds: 10nprofiles:n- pluginConfig:n - args:n apiVersion: kubescheduler.config.k8s.io/v1beta2n kind: DefaultPreemptionArgsn minCandidateNodesAbsolute: 100n minCandidateNodesPercentage: 10n name: DefaultPreemptionn - args:n apiVersion: kubescheduler.config.k8s.io/v1beta2n hardPodAffinityWeight: 1n kind: InterPodAffinityArgsn name: InterPodAffinityn - args:n apiVersion: kubescheduler.config.k8s.io/v1beta2n kind: NodeAffinityArgsn name: NodeAffinityn - args:n apiVersion: kubescheduler.config.k8s.io/v1beta2n kind: NodeResourcesBalancedAllocationArgsn resources:n - name: cpun weight: 1n - name: memoryn weight: 1n name: NodeResourcesBalancedAllocationn - args:n apiVersion: kubescheduler.config.k8s.io/v1beta2n kind: NodeResourcesFitArgsn scoringStrategy:n resources:n - name: cpun weight: 1n - name: memoryn weight: 1n type: LeastAllocatedn name: NodeResourcesFitn - args:n apiVersion: kubescheduler.config.k8s.io/v1beta2n defaultingType: Systemn kind: PodTopologySpreadArgsn name: PodTopologySpreadn - args:n apiVersion: kubescheduler.config.k8s.io/v1beta2n bindTimeoutSeconds: 600n kind: VolumeBindingArgsn name: VolumeBindingn plugins:n bind:n enabled:n - name: DefaultBindern weight: 0n filter:n enabled:n - name: NodeUnschedulablen weight: 0n - name: NodeNamen weight: 0n - name: 
TaintTolerationn weight: 0n - name: NodeAffinityn weight: 0n - name: NodePortsn weight: 0n - name: NodeResourcesFitn weight: 0n - name: VolumeRestrictionsn weight: 0n - name: EBSLimitsn weight: 0n - name: GCEPDLimitsn weight: 0n - name: NodeVolumeLimitsn weight: 0n - name: AzureDiskLimitsn weight: 0n - name: VolumeBindingn weight: 0n - name: VolumeZonen weight: 0n - name: PodTopologySpreadn weight: 0n - name: InterPodAffinityn weight: 0n - name: test-pluginn weight: 0n permit: {}n postBind: {}n postFilter:n enabled:n - name: DefaultPreemptionn weight: 0n preBind:n enabled:n - name: VolumeBindingn weight: 0n preFilter:n enabled:n - name: NodeResourcesFitn weight: 0n - name: NodePortsn weight: 0n - name: VolumeRestrictionsn weight: 0n - name: PodTopologySpreadn weight: 0n - name: InterPodAffinityn weight: 0n - name: VolumeBindingn weight: 0n - name: NodeAffinityn weight: 0n preScore:n enabled:n - name: InterPodAffinityn weight: 0n - name: PodTopologySpreadn weight: 0n - name: TaintTolerationn weight: 0n - name: NodeAffinityn weight: 0n queueSort:n enabled:n - name: PrioritySortn weight: 0n reserve:n enabled:n - name: VolumeBindingn weight: 0n score:n enabled:n - name: NodeResourcesBalancedAllocationn weight: 1n - name: ImageLocalityn weight: 1n - name: InterPodAffinityn weight: 1n - name: NodeResourcesFitn weight: 1n - name: NodeAffinityn weight: 1n - name: PodTopologySpreadn weight: 2n - name: TaintTolerationn weight: 1n schedulerName: test-schedulern"

I0308 15:16:38.344881 27469 server.go:135] "Starting Kubernetes Scheduler version" version="v0.0.0-master+b56e432f2191419647a6a13b9f5867801850f969"

I0308 15:16:38.345031 27469 healthz.go:170] No default health checks specified. Installing the ping handler.

I0308 15:16:38.345053 27469 healthz.go:174] Installing health checkers for (/healthz): "ping"

W0308 15:16:38.346080 27469 authorization.go:47] Authorization is disabled

W0308 15:16:38.346092 27469 authentication.go:47] Authentication is disabled

I0308 15:16:38.346107 27469 deprecated_insecure_serving.go:54] Serving healthz insecurely on [::]:10251

I0308 15:16:38.346128 27469 healthz.go:170] No default health checks specified. Installing the ping handler.

I0308 15:16:38.346135 27469 healthz.go:174] Installing health checkers for (/healthz): "ping"

I0308 15:16:38.377748 27469 requestheader_controller.go:169] Starting RequestHeaderAuthRequestController

I0308 15:16:38.377798 27469 shared_informer.go:240] Waiting for caches to sync for RequestHeaderAuthRequestController

I0308 15:16:38.377807 27469 configmap_cafile_content.go:201] "Starting controller" name="client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file"

I0308 15:16:38.377903 27469 shared_informer.go:240] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file

I0308 15:16:38.377808 27469 configmap_cafile_content.go:201] "Starting controller" name="client-ca::kube-system::extension-apiserver-authentication::client-ca-file"

I0308 15:16:38.378084 27469 shared_informer.go:240] Waiting for caches to sync for client-ca::kube-system::extension-apiserver-authentication::client-ca-file

I0308 15:16:38.378139 27469 reflector.go:219] Starting reflector *v1.ConfigMap (12h0m0s) from k8s.io/apiserver/pkg/authentication/request/headerrequest/requestheader_controller.go:172

I0308 15:16:38.378169 27469 reflector.go:255] Listing and watching *v1.ConfigMap from k8s.io/apiserver/pkg/authentication/request/headerrequest/requestheader_controller.go:172

I0308 15:16:38.378208 27469 tlsconfig.go:200] "Loaded serving cert" certName="Generated self signed cert" certDetail=""localhost@1683530197" [serving] validServingFor=[127.0.0.1,localhost,localhost] issuer="localhost-ca@1683530197" (2023-03-08 06:16:37 +0000 UTC to 2024-03-07 06:16:37 +0000 UTC (now=2023-03-08 07:16:38.378159 +0000 UTC))"

I0308 15:16:38.378266 27469 reflector.go:219] Starting reflector *v1.ConfigMap (12h0m0s) from k8s.io/apiserver/pkg/server/dynamiccertificates/configmap_cafile_content.go:205

I0308 15:16:38.378301 27469 reflector.go:255] Listing and watching *v1.ConfigMap from k8s.io/apiserver/pkg/server/dynamiccertificates/configmap_cafile_content.go:205

I0308 15:16:38.378351 27469 reflector.go:219] Starting reflector *v1.ConfigMap (12h0m0s) from k8s.io/apiserver/pkg/server/dynamiccertificates/configmap_cafile_content.go:205

I0308 15:16:38.378370 27469 reflector.go:255] Listing and watching *v1.ConfigMap from k8s.io/apiserver/pkg/server/dynamiccertificates/configmap_cafile_content.go:205

I0308 15:16:38.378601 27469 named_certificates.go:53] "Loaded SNI cert" index=0 certName="self-signed loopback" certDetail=""apiserver-loopback-client@1683530198" [serving] validServingFor=[apiserver-loopback-client] issuer="apiserver-loopback-client-ca@1683530197" (2023-03-08 06:16:37 +0000 UTC to 2024-03-07 06:16:37 +0000 UTC (now=2023-03-08 07:16:38.37857 +0000 UTC))"

I0308 15:16:38.378643 27469 secure_serving.go:200] Serving securely on [::]:10259

I0308 15:16:38.378677 27469 tlsconfig.go:240] "Starting DynamicServingCertificateController"

I0308 15:16:38.379005 27469 reflector.go:219] Starting reflector *v1.StatefulSet (0s) from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.379026 27469 reflector.go:255] Listing and watching *v1.StatefulSet from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.379513 27469 reflector.go:219] Starting reflector *v1.ReplicationController (0s) from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.379538 27469 reflector.go:255] Listing and watching *v1.ReplicationController from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.379786 27469 reflector.go:219] Starting reflector *v1.PodDisruptionBudget (0s) from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.379807 27469 reflector.go:255] Listing and watching *v1.PodDisruptionBudget from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.380116 27469 reflector.go:219] Starting reflector *v1.Node (0s) from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.381980 27469 reflector.go:255] Listing and watching *v1.Node from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.380334 27469 reflector.go:219] Starting reflector *v1.CSIDriver (0s) from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.382299 27469 reflector.go:255] Listing and watching *v1.CSIDriver from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.380374 27469 reflector.go:219] Starting reflector *v1.CSINode (0s) from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.382356 27469 reflector.go:255] Listing and watching *v1.CSINode from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.380367 27469 reflector.go:219] Starting reflector *v1.Pod (0s) from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.382403 27469 reflector.go:255] Listing and watching *v1.Pod from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.380652 27469 reflector.go:219] Starting reflector *v1.Namespace (0s) from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.380824 27469 reflector.go:219] Starting reflector *v1.StorageClass (0s) from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.381207 27469 reflector.go:219] Starting reflector *v1.PersistentVolumeClaim (0s) from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.381347 27469 reflector.go:219] Starting reflector *v1.Service (0s) from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.382730 27469 reflector.go:255] Listing and watching *v1.Service from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.382733 27469 reflector.go:255] Listing and watching *v1.Namespace from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.382792 27469 reflector.go:255] Listing and watching *v1.PersistentVolumeClaim from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.382773 27469 reflector.go:255] Listing and watching *v1.StorageClass from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.382755 27469 reflector.go:219] Starting reflector *v1beta1.CSIStorageCapacity (0s) from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.383772 27469 reflector.go:255] Listing and watching *v1beta1.CSIStorageCapacity from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.383848 27469 reflector.go:219] Starting reflector *v1.PersistentVolume (0s) from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.383872 27469 reflector.go:255] Listing and watching *v1.PersistentVolume from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.383957 27469 reflector.go:219] Starting reflector *v1.ReplicaSet (0s) from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.383975 27469 reflector.go:255] Listing and watching *v1.ReplicaSet from k8s.io/client-go/informers/factory.go:134

I0308 15:16:38.442855 27469 node_tree.go:65] Added node "master1" in group "" to NodeTree

I0308 15:16:38.443075 27469 eventhandlers.go:71] "Add event for node" node="master1"

I0308 15:16:38.443154 27469 node_tree.go:65] Added node "node1" in group "" to NodeTree

I0308 15:16:38.443286 27469 eventhandlers.go:71] "Add event for node" node="node1"

I0308 15:16:38.443315 27469 node_tree.go:65] Added node "node2" in group "" to NodeTree

I0308 15:16:38.443376 27469 eventhandlers.go:71] "Add event for node" node="node2"

I0308 15:16:38.478726 27469 shared_informer.go:270] caches populated

I0308 15:16:38.478753 27469 shared_informer.go:247] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file

I0308 15:16:38.478800 27469 shared_informer.go:270] caches populated

I0308 15:16:38.478849 27469 shared_informer.go:247] Caches are synced for RequestHeaderAuthRequestController

I0308 15:16:38.479364 27469 shared_informer.go:270] caches populated

I0308 15:16:38.479388 27469 shared_informer.go:270] caches populated

I0308 15:16:38.479405 27469 shared_informer.go:270] caches populated

I0308 15:16:38.479416 27469 shared_informer.go:270] caches populated

I0308 15:16:38.479427 27469 shared_informer.go:270] caches populated

I0308 15:16:38.479464 27469 shared_informer.go:270] caches populated

I0308 15:16:38.479575 27469 shared_informer.go:270] caches populated

I0308 15:16:38.479592 27469 shared_informer.go:247] Caches are synced for client-ca::kube-system::extension-apiserver-authentication::client-ca-file

I0308 15:16:38.479614 27469 tlsconfig.go:178] "Loaded client CA" index=0 certName="client-ca::kube-system::extension-apiserver-authentication::client-ca-file,client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file" certDetail=""front-proxy-ca" [] validServingFor=[front-proxy-ca] issuer="<self>" (2023-03-13 04:08:36 +0000 UTC to 2033-03-10 04:08:36 +0000 UTC (now=2023-03-08 07:16:38.479575 +0000 UTC))"

I0308 15:16:38.479945 27469 tlsconfig.go:200] "Loaded serving cert" certName="Generated self signed cert" certDetail=""localhost@1683530197" [serving] validServingFor=[127.0.0.1,localhost,localhost] issuer="localhost-ca@1683530197" (2023-03-08 06:16:37 +0000 UTC to 2024-03-07 06:16:37 +0000 UTC (now=2023-03-08 07:16:38.479914 +0000 UTC))"

I0308 15:16:38.480232 27469 named_certificates.go:53] "Loaded SNI cert" index=0 certName="self-signed loopback" certDetail=""apiserver-loopback-client@1683530198" [serving] validServingFor=[apiserver-loopback-client] issuer="apiserver-loopback-client-ca@1683530197" (2023-03-08 06:16:37 +0000 UTC to 2024-03-07 06:16:37 +0000 UTC (now=2023-03-08 07:16:38.48021 +0000 UTC))"

I0308 15:16:38.480409 27469 tlsconfig.go:178] "Loaded client CA" index=0 certName="client-ca::kube-system::extension-apiserver-authentication::client-ca-file,client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file" certDetail=""kubernetes" [] validServingFor=[kubernetes] issuer="<self>" (2023-03-13 04:08:36 +0000 UTC to 2033-03-10 04:08:36 +0000 UTC (now=2023-03-08 07:16:38.48038 +0000 UTC))"

I0308 15:16:38.480463 27469 tlsconfig.go:178] "Loaded client CA" index=1 certName="client-ca::kube-system::extension-apiserver-authentication::client-ca-file,client-ca::kube-system::extension-apiserver-authentication::requestheader-client-ca-file" certDetail=""front-proxy-ca" [] validServingFor=[front-proxy-ca] issuer="<self>" (2023-03-13 04:08:36 +0000 UTC to 2033-03-10 04:08:36 +0000 UTC (now=2023-03-08 07:16:38.480437 +0000 UTC))"

I0308 15:16:38.480733 27469 tlsconfig.go:200] "Loaded serving cert" certName="Generated self signed cert" certDetail=""localhost@1683530197" [serving] validServingFor=[127.0.0.1,localhost,localhost] issuer="localhost-ca@1683530197" (2023-03-08 06:16:37 +0000 UTC to 2024-03-07 06:16:37 +0000 UTC (now=2023-03-08 07:16:38.480707 +0000 UTC))"

I0308 15:16:38.481089 27469 named_certificates.go:53] "Loaded SNI cert" index=0 certName="self-signed loopback" certDetail=""apiserver-loopback-client@1683530198" [serving] validServingFor=[apiserver-loopback-client] issuer="apiserver-loopback-client-ca@1683530197" (2023-03-08 06:16:37 +0000 UTC to 2024-03-07 06:16:37 +0000 UTC (now=2023-03-08 07:16:38.481053 +0000 UTC))"

test-deployment.yaml

Create test-deployment.yaml in the project root. It sets schedulerName so that the scheduling behaviour of test-scheduler can be tested:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: test-deployment
  template:
    metadata:
      labels:
        app: test-deployment
    spec:
      # schedulerName is set explicitly to the name of the custom scheduler defined above, test-scheduler
      schedulerName: test-scheduler
      containers:
        - image: nginx
          imagePullPolicy: IfNotPresent
          name: nginx
          ports:
            - containerPort: 80

Apply it:

kubectl apply -f test-deployment.yaml

Watch the command-line output of test-scheduler. The lines logged from plugins.go:23 show that the pod went through the custom scheduler and its filter plugin:

I0308 15:26:18.758480 27469 eventhandlers.go:123] "Add event for unscheduled pod" pod="default/test-deployment-7db4867dc8-tslwc"

I0308 15:26:18.758588 27469 scheduling_queue.go:928] "About to try and schedule pod" pod="default/test-deployment-7db4867dc8-tslwc"

I0308 15:26:18.758620 27469 scheduler.go:519] "Attempting to schedule pod" pod="default/test-deployment-7db4867dc8-tslwc"

I0308 15:26:18.758803 27469 plugins.go:23] filter pod: test-deployment-7db4867dc8-tslwc, node: node1

I0308 15:26:18.758803 27469 plugins.go:23] filter pod: test-deployment-7db4867dc8-tslwc, node: node2

I0308 15:26:18.759565 27469 default_binder.go:52] "Attempting to bind pod to node" pod="default/test-deployment-7db4867dc8-tslwc" node="node2"

I0308 15:26:18.785559 27469 cache.go:384] Finished binding for pod 2f950836-ce36-47b4-8c22-3f35eb92934e. Can be expired.

I0308 15:26:18.785685 27469 scheduler.go:675] "Successfully bound pod to node" pod="default/test-deployment-7db4867dc8-tslwc" node="node2" evaluatedNodes=3 feasibleNodes=2

I0308 15:26:18.785970 27469 eventhandlers.go:166] "Delete event for unscheduled pod" pod="default/test-deployment-7db4867dc8-tslwc"

I0308 15:26:18.785993 27469 eventhandlers.go:186] "Add event for scheduled pod" pod="default/test-deployment-7db4867dc8-tslwc"

View the pod's events:

Events:
  Type    Reason     Age   From            Message
  ----    ------     ----  ----            -------
  Normal  Scheduled  47s   test-scheduler  Successfully assigned default/test-deployment-7db4867dc8-tslwc to node2
  Normal  Pulled     47s   kubelet         Container image "nginx" already present on machine
  Normal  Created    47s   kubelet         Created container nginx
  Normal  Started    47s   kubelet         Started container nginx